On Thursday, May 25, Brandon Jackson, a software engineer in Baltimore County, Maryland, discovered that he was locked out of his Amazon account. Jackson couldn’t get packages delivered to his home by the retail giant. He couldn’t access any files and data he had stored with Amazon Web Services, the company’s powerful cloud computing wing. It also meant that Jackson, a self-described home automation enthusiast, could no longer use Alexa for his smart home devices. He could turn on his lights manually, but only in the knowledge that Amazon could still operate them remotely.

Jackson soon discovered that Amazon had suspended his account because a Black delivery driver who'd come to his house the previous day had reported hearing racist remarks from his video doorbell. In a brief email sent to Jackson at 3 a.m., the company explained that it had unilaterally placed all of his linked devices and services on hold while it conducted an internal investigation.

The accusations baffled Jackson. He and his family are Black. When he reviewed the doorbell’s footage, he saw that nobody was home at the time of the delivery. At a loss for what could have prompted the accusation of racism, he suspected the driver had misinterpreted the doorbell’s automated response: “Excuse me, can I help you?”

Submitting the surveillance video "appeared to have little impact on [Amazon's] decision to disable my account," Jackson explained on his blog on June 4. "In the end, my account was unlocked on Wednesday [May 31, six days later], with no follow-up to inform me of the resolution." By now, many months later, Amazon's investigation into the matter appears to have concluded, though the issue remains far from resolved. Contacted for a response, the company wrote: "In this case, we learned through our investigation that the customer did not act inappropriately, and we're working directly with the customer to resolve their concerns while also looking at ways to prevent a similar situation from happening again."

It was only Jackson’s technical skills and particular automated home setup that saved him from what could have been a larger lockout. “My home was fine as I just used Siri or [a] locally hosted dashboard if I wanted to change a light’s color or something of that nature,” he explained. His week of digital exile amounted to a frustrating inconvenience only because, as a tech-savvy user and professional software engineer, he had the ability to set up his own locally hosted network that acted as a failsafe. But Jackson’s experience is a warning to the vast majority of Alexa users and smart home dwellers who, lacking his particular skills and foresight, are increasingly at the mercy of the tech they have embedded into their lives and bedrooms.

"I came forward," Jackson told Tablet, "because I don't think it's right that Amazon could say, 'I know you bought all these devices, but we think you are racist. So we're going to take [you] offline.'" Some critics lambasted Jackson as a dupe for having smart devices in the first place; others said his criticisms of Amazon implied that he didn't support a company protecting its employees. "People missed the main point," he said. "I don't really care who you are, what you do, or what you believe in. If you bought something, you should own it."

Jackson’s story of being temporarily canceled by the tech behemoth spread across the internet after it was discussed in a YouTube video by Louis Rossman, a right-to-repair activist, independent technician, and popular YouTube personality. Right to repair, or fair repair, is a consumer-focused movement advocating for the public to be able to repair the equipment they own instead of being forced to use the manufacturer’s repair services or upgrade products that have been arbitrarily made obsolete. In the early 20th century, fair-repair advocacy began with automobiles and heavy machinery, but its tenets have spread as computer chips have come to undergird contemporary life.

Following Rossman's initial video about Jackson's case, Amazon alleged that Rossman had abused its affiliate marketing program and placed restrictions on the YouTuber's business account, leading him to speculate in a follow-up video that the corporate giant was retaliating against him for covering Jackson's travails. Rossman says this was the first time Amazon had accused him of abusing the program since he enrolled in it 7.5 years earlier.


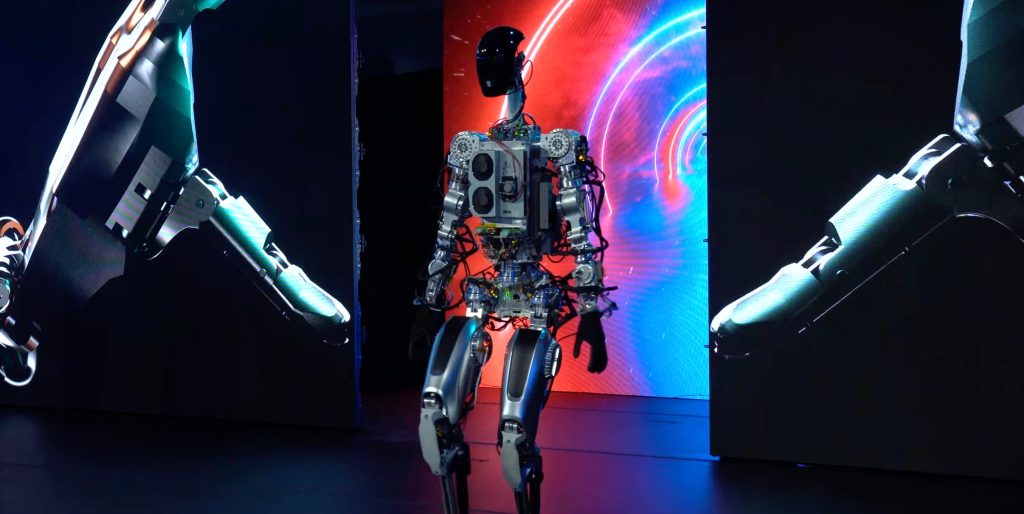

The number of households adopting smart home devices in the United States is expected to reach 93 million by 2027 and most consumers rely on cloud services for their daily online use. But the cloud is not just a metaphor to explain a connected network; it describes the complete reorganization of digital life under the power of remote centralized databases. Light switches, lightbulbs, locks, thermostats, coffee makers, air conditioners, speakers, exercise equipment, and virtually every other piece of equipment you can find in the average home can now all be operated as interconnected pieces of a single digital network, run by an outside host, such as Amazon, which operates the massive server banks that make up “the cloud.” For consumers, this arrangement offers convenience and optimization. You can turn on the heat in your house from another state, or reorder a household good with a simple voice command. But the cost of that convenience is that consumers no longer independently control how their tech—or their homes, since the two are increasingly integrated—is operated. As Kyle Wiens, CEO of iFixit and another right-to-repair activist put it, “Who really owns our things? It used to be us.”

Brandon Jackson

Alexa's terms of use include a clause stating that Amazon is permitted to terminate "access" to Alexa at the company's discretion without notice. Jackson was told by a customer relations executive over the phone that he needed to assure the company that he would not ridicule or put future delivery drivers in harm's way. Nearly a month later, Amazon admitted no wrongdoing, only apologizing for "inconveniences." Given absolute power over its users, Amazon is under no pressure to explain its decision. Indeed, the company gave Tablet the same statement it had provided for an earlier June Newsweek article about Jackson's lockout.

Amazon's claims of being concerned about the safety of blue-collar workers strain credibility. According to a 2021 article published in Vice, when minority delivery drivers faced violent threats and racial harassment, the company's penchant for efficiency took priority over worker safety. Unsustainable demands on delivery drivers have translated into drivers peeing in bottles and defecating in garbage bags, a problem Amazon acknowledged internally even as it publicly denied the allegations. Inside its "fulfillment centers," the term the company uses for its warehouses, workers suffer 5.9 serious injuries for every 100 workers, an injury rate 80% higher than that of competitors. Indeed, employee turnover is so high in these facilities that a leaked company memo from 2022 warned that the company was on track to deplete its pool of available workers by 2024.

Amazon's intrusion into Jackson's life, then, should not be understood as a matter of protecting workers (which might begin by giving them adequate time to use the restroom) but rather as part of an emergent regime of technological control. Years of debate over moderating political and civic norms on social media and in public discourse have culminated in a new normative standard in which "innocent until proven guilty" is viewed as an oppressive and antiquated relic. As the new unelected masters of public discourse, tech giants like Amazon, Google, Twitter, and Facebook have been encouraged to execute summary punishments of users for mere accusations of racism or "disinformation."

Amazon's enormous power in the global economy and ubiquitous presence in the U.S. supply chain and cloud computing sectors allow the company to take the power of surveillance and cancellation even further. Unlike purely social media companies like X (formerly known as Twitter), Amazon's suite of smart home gadgets and services gives it a direct physical presence inside people's homes. That means that when Amazon wades into cultural issues, or decides to punish people for offensive speech, its political values are mapped onto objects and processes used in the real world.

In Jackson’s case, in order to regain access to things he had already paid for, he was forced to submit the surveillance video from his home to Amazon to prove his innocence. Somehow, in the new cloud-based networked world these corporations are building for us, the solution to every problem always involves individuals handing over more of their private data.

Debates over censorship, free speech, and its limits typically revolve around social media use. But Hayley Tsukayama, a senior legislative activist for the Electronic Frontier Foundation, a digital rights group, suggested to Tablet that Jackson's case shared an architecture with conversations around content moderation. Companies can choose not to allow certain forms of speech, but in doing so they can no longer be treated as neutral platforms. Tsukayama argues that social media users are offered recourse, even if the process is stacked against them. "If [Amazon] is going to look at customer behavior as being part of the terms of service," she said, "they [should] make that clear and set up a process that's perhaps not unlike what we see at Facebook, YouTube or others who deal with content takedown."

But, of course, we now know that millions of social media users had their accounts censored or banned, without explanation or recourse, for posts that included many classified as "disinformation" at the time of the alleged offense but containing statements that authorities later acknowledged as true. In that light, placing more trust in a content moderation model seems like a dangerous gamble. It could also lead to even more surveillance online as companies like Amazon claim a need to monitor their customers' every move so they can judge them "fairly."

Like many digital technologies, the smart home offers connectivity at a steep price: it makes individuals passive subjects of the products that surround them, including the things they own. Few of us have any real understanding of the "terms of service" on the devices and services that we rely on. Consider how streaming services replaced physical media, and how the arrival of smartphones, with all their wonders, also meant that their owners lost the ability to replace their own batteries, SIM cards, and physical storage. If we ponder that relationship for a moment, we might conclude that many of the things we believe we control are really on loan as a means of controlling us.