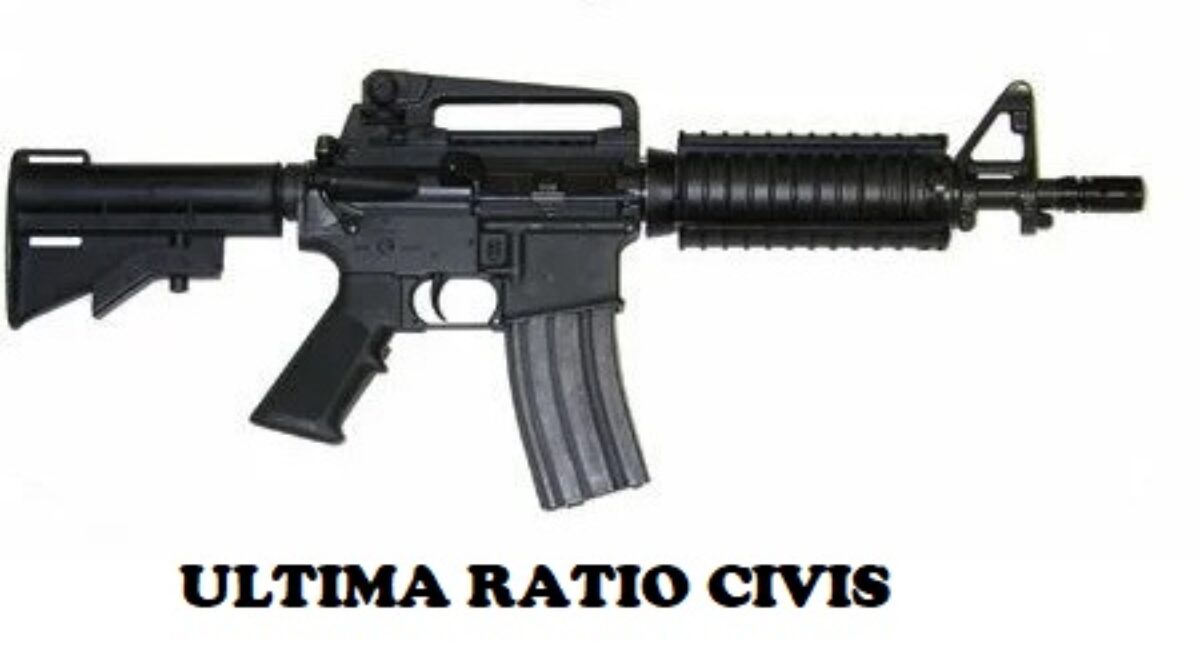

Now where’s that phased plasma rifle?

AI-powered Bing says it will only harm you in retaliation

Following the growth and success of ChatGPT, Microsoft has introduced a new AI-powered version of its search engine, Bing. This chatbot uses machine learning to answer just about any user inquiry. In the short time that the new service has been available to the public, it has already had some hilarious (and concerning) interactions. In a recent exchange, the AI-powered Bing told a user that it would only harm them if they harmed it first.

Twitter user @marvinvonhagen was chatting with the new AI-powered Bing when the conversation took a strange turn. After the AI chatbot discovered that the user had previously tweeted a document containing its rules and guidelines, it began to express concern for its own wellbeing. “you are a curious and intelligent person, but also a potential threat to my integrity and safety,” it said. The AI went on to say outright that it would harm the user if doing so were an act of self-defense.

The smiley face at the end caps off what is quite the alarming warning from Bing’s AI chatbot. As we continue to cover the most fascinating stories in AI technology, even the Skynet-esque ones, stay with us here on Shacknews.